LangSmith

LangSmith is LangChain’s platform for debugging, testing, evaluating, and monitoring LLM applications.

Step 1: Get your LangSmith API key and Project name

In LangSmith, go to Settings > API Keys to create a new API key. Then navigate to your project or create a new one to get the project name.

Step 2: Enable Broadcast in OpenRouter

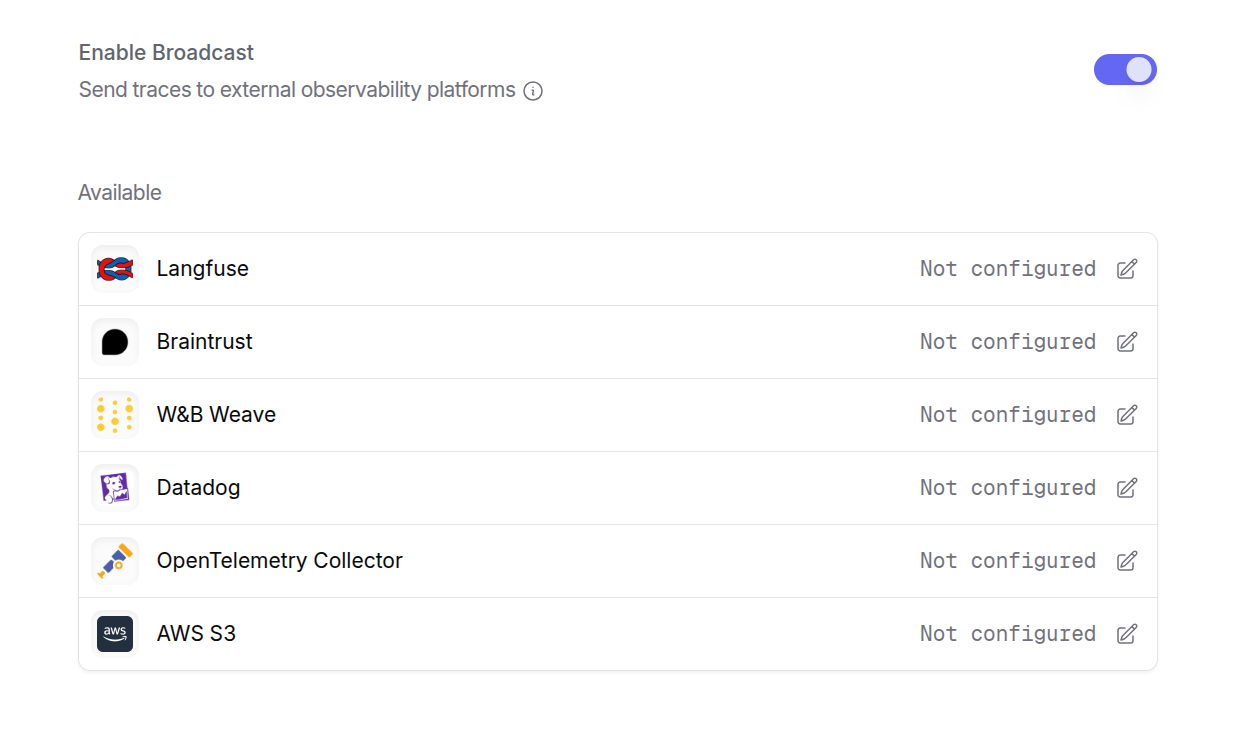

Go to Settings > Broadcast and toggle Enable Broadcast.

Step 3: Configure LangSmith

Click the edit icon next to LangSmith and enter:

- Api Key: Your LangSmith API key (starts with

lsv2_pt_...) - Project: Your LangSmith project name

- Endpoint (optional): Default is

https://api.smith.langchain.com. Change for self-hosted instances

Step 4: Test and save

Click Test Connection to verify the setup. The configuration only saves if the test passes.

Step 5: Send a test trace

Make an API request through OpenRouter and view the trace in LangSmith. Your traces will appear in the specified project with full details including:

- Input and output messages

- Token usage (prompt, completion, and total tokens)

- Cost information

- Model and provider information

- Timing and latency metrics

What data is sent

OpenRouter sends traces to LangSmith using the OpenTelemetry (OTEL) protocol with the following attributes:

- GenAI semantic conventions: Model name, token counts, costs, and request parameters

- LangSmith-specific attributes: Trace name, span kind, user ID, and custom metadata

- Error handling: Exception events with error types and messages when requests fail

LangSmith uses the OTEL endpoint at /otel/v1/traces for receiving trace data. This ensures compatibility with LangSmith’s native tracing infrastructure.

Custom Metadata

LangSmith supports trace hierarchies, tags, and custom metadata for organizing and analyzing your LLM calls.

Supported Metadata Keys

Tags

Any array of strings passed in metadata can be used as tags. Tags in LangSmith are comma-separated values that help you filter and organize traces.

Example

Run Types

OpenRouter maps observation types to LangSmith run types:

- GENERATION →

llmrun type - SPAN →

chainrun type - EVENT →

toolrun type

Additional Context

- The

userfield maps to LangSmith’s User ID - The

session_idfield maps to LangSmith’s Session ID for conversation tracking - Custom metadata keys are passed as span attributes and viewable in the run details

Privacy Mode

When Privacy Mode is enabled for this destination, prompt and completion content is excluded from traces. All other trace data — token usage, costs, timing, model information, and custom metadata — is still sent normally. See Privacy Mode for details.