Sentry

Sentry is an application monitoring platform that helps developers identify and fix issues in real time. With Sentry’s AI monitoring capabilities, you can track LLM performance and errors.

Step 1: Get your Sentry OTLP endpoint and DSN

In Sentry, navigate to your project’s SDK setup:

- Log in to your Sentry account

- Go to Settings > Projects > [Your Project] > SDK Setup > Client Keys (DSN)

- Click on the OpenTelemetry tab

- Copy the OTLP Traces Endpoint URL (ends with `/v1/traces`)

- Copy your DSN from the same page

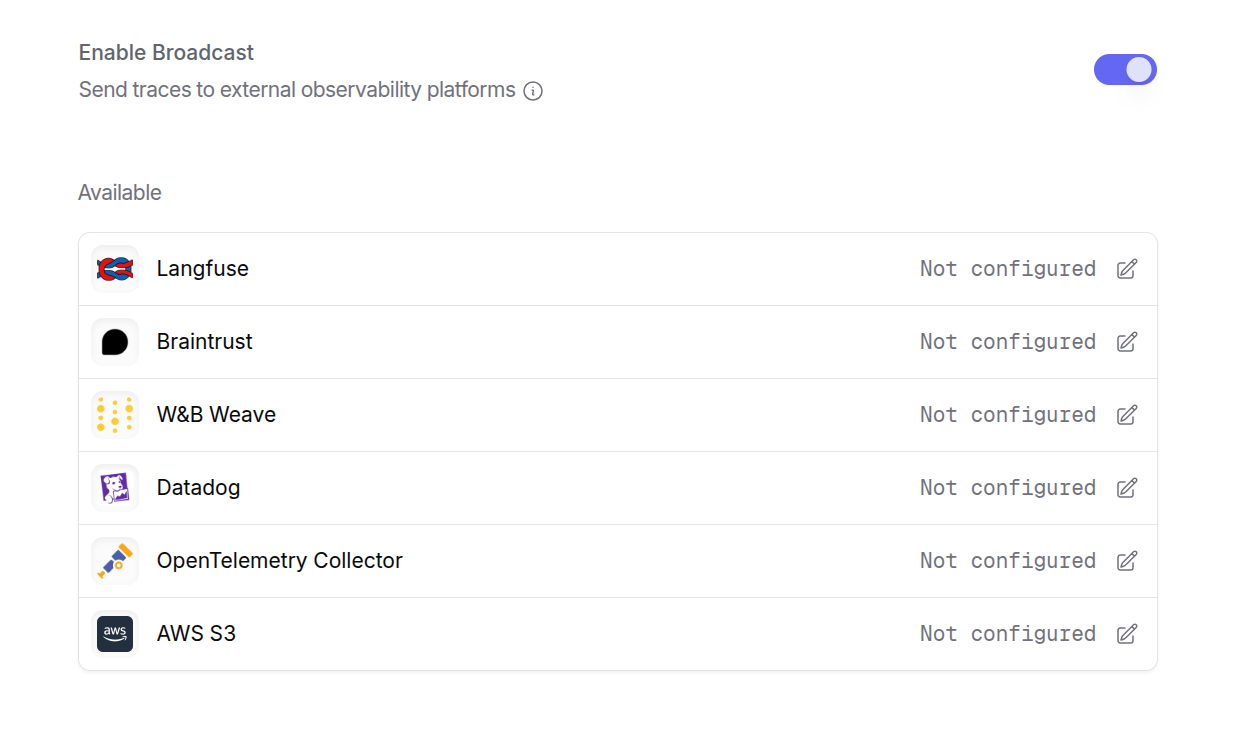

Step 2: Enable Broadcast in OpenRouter

Go to Settings > Broadcast and toggle Enable Broadcast.

Step 3: Configure Sentry

Click the edit icon next to Sentry and enter:

- OTLP Traces Endpoint: The OTLP endpoint URL from Sentry (e.g., `https://o123.ingest.us.sentry.io/api/456/integration/otlp/v1/traces`)

- Sentry DSN: Your Sentry DSN (e.g., `https://[email protected]/456`)
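Before pasting the two values, it can help to sanity-check their shape locally. The sketch below is only an illustration: `check_sentry_settings` is a hypothetical helper, and it assumes a DSN has the form `https://<public-key>@<host>/<project-id>` with a numeric project ID. It does not contact Sentry and is no substitute for Test Connection.

```python
from urllib.parse import urlparse


def check_sentry_settings(otlp_endpoint: str, dsn: str) -> list[str]:
    """Return a list of problems; an empty list means both values look plausible."""
    problems = []

    endpoint = urlparse(otlp_endpoint)
    if endpoint.scheme != "https":
        problems.append("OTLP endpoint should use https")
    # The guide states that the OTLP Traces Endpoint ends with /v1/traces.
    if not endpoint.path.endswith("/v1/traces"):
        problems.append("OTLP endpoint should end with /v1/traces")

    parsed_dsn = urlparse(dsn)
    # Assumption: the public key is the part of the DSN before '@'.
    if not parsed_dsn.username:
        problems.append("DSN is missing the public key before '@'")
    # Assumption: the DSN path is a numeric project ID.
    if not parsed_dsn.path.strip("/").isdigit():
        problems.append("DSN should end with a numeric project ID")

    return problems
```

For example, calling it with an endpoint that lacks the `/v1/traces` suffix returns a one-item list describing that problem, while two well-formed values return an empty list.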

Step 4: Test and save

Click Test Connection to verify the setup. The configuration is saved only if the test passes.

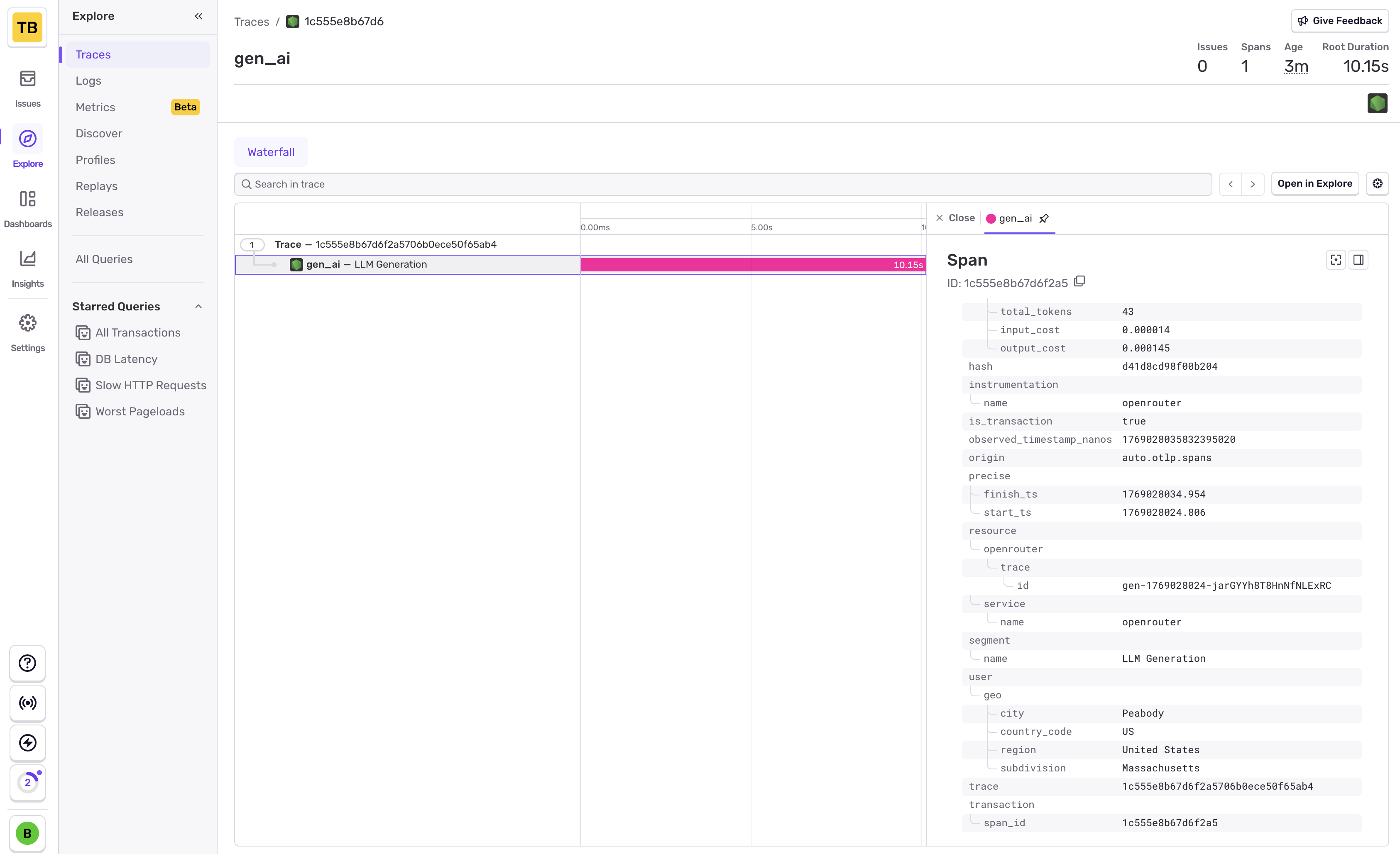

Step 5: Send a test trace

Make an API request through OpenRouter and view the trace in Sentry’s Performance or Traces view.
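A test request might look like the following minimal sketch, which uses only the Python standard library. The model name and prompt are placeholders, and the `OPENROUTER_API_KEY` environment variable name is an assumption; substitute whatever your account uses.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_test_request(api_key: str, model: str = "openai/gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a small chat completion request."""
    body = {
        "model": model,  # placeholder model; any model you have access to works
        "messages": [{"role": "user", "content": "Say hello for a Sentry trace test."}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_test_request() -> int:
    """Send the request and return the HTTP status code."""
    # Assumption: the API key is stored in OPENROUTER_API_KEY.
    api_key = os.environ["OPENROUTER_API_KEY"]
    with urllib.request.urlopen(build_test_request(api_key)) as response:
        return response.status
```

Calling `send_test_request()` once should be enough to produce a trace, provided Broadcast is enabled and the Sentry destination was saved.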

Sentry uses OpenTelemetry for trace ingestion. The OTLP endpoint and DSN are both required for proper authentication and trace routing.

Custom Metadata

Sentry receives traces via OTLP (the OpenTelemetry Protocol). Custom metadata from the `trace` field is sent as span attributes and can be used for filtering and analysis in Sentry’s Performance view.

Supported Metadata Keys

Example

Additional Context

- Custom metadata keys from `trace` are included as span attributes under the `trace.metadata.*` namespace

- The `user` field maps to `user.id` in span attributes

- The `session_id` field maps to `session.id` in span attributes

- Sentry automatically correlates LLM traces with your application’s existing error and performance data when using `parent_span_id`
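The mapping described in this list can be sketched as follows. This is a local illustration only, not OpenRouter's or Sentry's implementation: both helper functions, the model name, and the `feature` metadata key are hypothetical, and the sketch assumes `parent_span_id` is treated as a reserved key rather than as custom metadata.

```python
def build_traced_payload(prompt, user_id, session_id, parent_span_id, metadata):
    """Build a request body that carries custom metadata in the trace field."""
    trace = dict(metadata)  # custom keys, e.g. {"feature": "search"}
    trace["parent_span_id"] = parent_span_id  # links the LLM trace to an existing span
    return {
        "model": "openai/gpt-4o-mini",  # placeholder model
        "messages": [{"role": "user", "content": prompt}],
        "user": user_id,
        "session_id": session_id,
        "trace": trace,
    }


def expected_span_attributes(payload):
    """Return the span attributes the list above says to expect in Sentry."""
    attributes = {
        "user.id": payload["user"],
        "session.id": payload["session_id"],
    }
    for key, value in payload["trace"].items():
        # Assumption: parent_span_id is used for correlation, not stored as metadata.
        if key != "parent_span_id":
            attributes[f"trace.metadata.{key}"] = value
    return attributes
```

With `metadata={"feature": "search"}`, the second helper yields a `trace.metadata.feature` attribute alongside `user.id` and `session.id`, which are the names to filter on in Sentry.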

Privacy Mode

When Privacy Mode is enabled for this destination, prompt and completion content is excluded from traces. All other trace data — token usage, costs, timing, model information, and custom metadata — is still sent normally. See Privacy Mode for details.